Learn by Benchmarking Ruby App Servers Badly

(Hey! I usually post about learning important, quotable things about Ruby configuration and performance. THIS POST IS DIFFERENT, in that it is LESSONS LEARNED FROM DOING THIS BADLY. Please take these graphs with a large grain of salt, even though there are some VERY USEFUL THINGS HERE IF YOU’RE LEARNING TO BENCHMARK. But the title isn’t actually a joke - these aren’t great results.)

What’s a Ruby App Server? You might use Unicorn or Thin, Passenger or Puma. You might even use WEBrick, Ruby’s built-in application server. The application server parses HTTP requests into Rack, Ruby’s favored web interface. It also runs multiple processes or threads for your app, if you use them.

Usually I write about Rails Ruby Bench. Unfortunately, a big Rails app with slow requests doesn’t show much difference between the app servers - that’s just not where the time gets spent. Every app server is tolerably fast, and if you’re running a big chunky request behind it, you don’t need more than “tolerably fast.” Why would you?

But if you’re running small, fast requests, then the differences in app servers can really shine. I’m writing a new benchmark, so this is a great time to look at that. Spoiler: I’m going to discover that the load-tester I’m using, ApacheBench, is so badly behaved that most of my results are very low-precision and don’t tell us much. You can expect a better post later when it all works. In the meantime, I’ll get some rough results and show something interesting about Passenger’s free version.

For now, I’m still using “Hello, World”-style requests, like last time.

Waits and Measures

I’m using ApacheBench to take these measurements - it’s a common load-tester used for simple benchmarking. It’s also, as I observed last time, not terribly exact.

For all the measurements below I’m running 10,000 requests against a running server using ApacheBench. This set is all with concurrency 1 — that is, ApacheBench runs each request, then makes another one only after the first one has returned completely. We’ll talk more about that in a later section.
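If you want to reproduce a run like this, here is a minimal sketch of the command line involved. ApacheBench's `-n` (total requests) and `-c` (concurrency) flags are real; the helper name `ab_command` and the `http://127.0.0.1:3000/` address are assumptions for illustration, not the exact setup used for these graphs.

```ruby
# Build the ApacheBench command line for one benchmark run.
# -n is the total number of requests, -c is how many are in flight at once.
def ab_command(url, requests: 10_000, concurrency: 1)
  ["ab", "-n", requests.to_s, "-c", concurrency.to_s, url]
end

# Hypothetical local address; substitute wherever your app server listens.
cmd = ab_command("http://127.0.0.1:3000/")
puts cmd.join(" ")

# To actually run it (needs ab on your PATH and a server already running):
# system(*cmd)
```

Building the command as an array rather than a string means `system(*cmd)` skips the shell, so nothing in the URL gets reinterpreted.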

I’m checking not only each app server against the others, but also all of them by Ruby version — checking Ruby version speed is kinda my thing, you know?

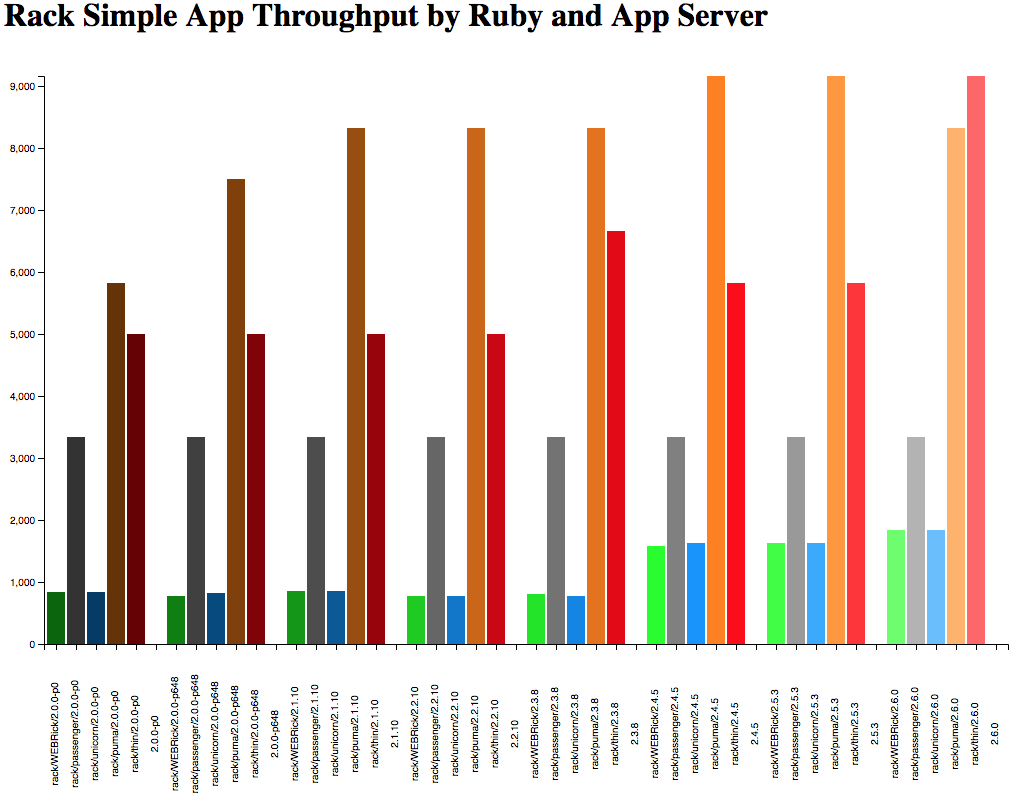

So: first, let’s look at the big graph. I love big graphs - that’s also kinda my thing.

You can click to enlarge the image, but it’s still pretty visually busy.

What are we seeing here?

Quick Interpretations

Each little cluster of five bars is a specific Ruby version running a “hello, world” tiny Rails app. The speed is averaged from six runs of 10k HTTP requests. The five different-colored bars are for (in order) WEBrick (green), Passenger (gray), Unicorn (blue), Puma (orange) and Thin (red). Is it just me, or is Thin way faster than you’d expect, given how little we hear about it?
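Averaging six runs is simple, but it's worth looking at the spread too, since a mean alone hides how noisy the runs were. Here's a rough sketch. The numbers in `runs` are placeholders I made up so the arithmetic is easy to follow. They are not measurements from these graphs.

```ruby
# Mean and sample standard deviation of throughput across repeated runs.
# Uses reduce rather than Enumerable#sum so it also works on older Rubies.
def mean(values)
  values.reduce(:+).to_f / values.size
end

def sample_stddev(values)
  m = mean(values)
  squared_diffs = values.map { |v| (v - m)**2 }
  Math.sqrt(squared_diffs.reduce(:+) / (values.size - 1))
end

# Placeholder requests/second figures for six runs. NOT real data.
runs = [980.0, 1010.0, 995.0, 1005.0, 990.0, 1020.0]

puts format("mean: %.1f req/s, stddev: %.1f req/s", mean(runs), sample_stddev(runs))
```

If the standard deviation is a large fraction of the gap between two bars, the gap probably isn't telling you much.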

The first thing I see is an overall up-and-to-the-right trend. Yay! That means that later Ruby versions are faster. If that weren’t true, I would be sad.

The next thing I see is relatively small differences across this range. That makes some sense - a tiny Rails app returning a static string probably won’t get much speed advantage out of most optimizations. Eyeballing the graph, I’m seeing something around 25%-40% speedup. Given how inaccurate ApacheBench’s result format is, that’s as nearly exact as I’d care to speculate from this data. (I’ll be trying out some load-testers other than ApacheBench in future posts.)

(Is +25% really “relatively small” as a speedup for a mature language? Compared to the OptCarrot or Rails Ruby Bench results it is! Ruby 2.6 is a lot faster than 2.0 by most measures. And remember, we want three times as fast, or +200%, for Ruby 3x3.)
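The "three times as fast is +200%" conversion trips people up, so here is the arithmetic as a tiny sketch. The helper names are mine, purely for illustration.

```ruby
# Convert a "times as fast" ratio into a "+N%" speedup, and back again.
def ratio_to_percent(ratio)
  (ratio - 1.0) * 100.0
end

def percent_to_ratio(percent)
  1.0 + percent / 100.0
end

puts ratio_to_percent(3.0)   # three times as fast, as a percentage gain
puts percent_to_ratio(25.0)  # a +25% speedup, as a ratio
```

So a +25% speedup is 1.25 times as fast, which is a long way short of the 3.0 that Ruby 3x3 is aiming for.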

I’m also seeing a significant difference between the fastest and slowest app servers. From this graph, I’d say the fastest are Puma, Thin and Passenger, in that order, at the front of the pack. The two slower servers are Unicorn and WEBrick - though both put in a pretty respectable showing at around 70% of the fastest speeds. For fairly short requests like this, the app server makes a big difference - but not “ridiculously massive,” just “big.”

But Is Rack Even Faster?

In Ruby, a Rack “Hello, World” app is the fastest most web apps get. You can do better in a systems language like Java, but Ruby isn’t built for as much speed. So: what does the graph look like for the fastest apps in Ruby? How fast is each app server?
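For anyone who hasn't seen one, a Rack "Hello, World" really is tiny. This is a generic sketch of the Rack contract, not necessarily the exact app behind these graphs. The constant name `HelloWorld` and the hand-built `env` hash are assumptions for illustration.

```ruby
# A Rack app is any object that responds to #call(env) and returns
# [status, headers, body], where body responds to #each.
HelloWorld = lambda do |_env|
  [200, { "content-type" => "text/plain" }, ["Hello, World!\n"]]
end

# In a config.ru file you would hand this to the app server with:
#   run HelloWorld
# Here we call it directly with a minimal, hand-built env to show the contract.
status, _headers, body = HelloWorld.call({ "REQUEST_METHOD" => "GET", "PATH_INFO" => "/" })
puts status
body.each { |chunk| print chunk }
```

There's no routing, no middleware and no templating, which is why nearly all the time in a benchmark like this gets spent in the app server itself.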

Here’s what that graph looks like.

What I see there: this is fast enough that ApacheBench’s output format is sabotaging all accuracy. I won’t speculate exactly how much faster these are - that would be a bad idea. But we’re seeing the same patterns as above, emphasized even more - Puma is several times faster than WEBrick here, for instance. I’ll need to use a different load-tester with better accuracy to find out just how much faster. (Watch this space for updates!)
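To see why coarse output hurts so much with fast requests, here's a small sketch. I'm assuming the precision loss comes from latencies being reported in whole milliseconds. The latencies below are invented for illustration and are not measurements.

```ruby
# Simulate a report that only shows whole milliseconds.
def reported_ms(actual_ms)
  actual_ms.round
end

# Hypothetical per-request latencies in milliseconds. NOT real data.
latencies = { "server A" => 0.6, "server B" => 1.4 }

latencies.each do |name, ms|
  puts "#{name}: actual #{ms} ms, reported #{reported_ms(ms)} ms"
end
```

Both servers report the same whole-millisecond figure even though one takes more than twice as long as the other. With multi-millisecond Rails requests the same rounding costs a much smaller fraction of the signal.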

Single File Isn’t the Only Way

Okay. So, this is pretty okay. Pretty graphs are nice. But raw single-request speed isn’t the only reason to run a particular web server. What about that “concurrency” thing that’s supposed to be one of the three pillars of Ruby 3x3?

Let’s test that.

Let’s start with just turning up the concurrency on ApacheBench. That’s pretty easy - you can just pass “-c 3” to keep three requests going at once, for instance. We’ve seen the equivalent of “-c 1” above. What does “-c 2” look like for Rails?
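If "keep three requests going at once" feels abstract, here's a hand-rolled sketch of the idea using threads. It models what a concurrency setting means, not how ApacheBench is implemented internally. `run_load` is a hypothetical helper, and the block passed to it is a fake 10 ms "request" standing in for a real HTTP call.

```ruby
require "thread" # Queue is built in on modern Rubies; the require is harmless

# Keep up to `concurrency` requests in flight until `total` have completed.
# Each worker starts its next request only after its previous one returns.
def run_load(total:, concurrency:, &request)
  queue = Queue.new
  total.times { |i| queue << i }

  workers = Array.new(concurrency) do
    Thread.new do
      loop do
        begin
          job = queue.pop(true) # non-blocking pop; raises ThreadError when empty
        rescue ThreadError
          break
        end
        request.call(job)
      end
    end
  end
  workers.each(&:join)
end

started = Process.clock_gettime(Process::CLOCK_MONOTONIC)
run_load(total: 30, concurrency: 3) { |_i| sleep 0.01 } # fake 10 ms "request"
elapsed = Process.clock_gettime(Process::CLOCK_MONOTONIC) - started
puts format("30 fake requests at concurrency 3: %.3f s", elapsed)
```

With concurrency 1 this degenerates into the one-at-a-time behavior from the earlier graphs. Turning the number up only helps if the server on the other end can actually work on several requests at once.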

Here:

That’s interesting. The gray bars are Passenger, which seems to benefit the most from more concurrency. And of course, the precision still isn’t good, because it’s still ApacheBench.

What if we turn up the concurrency a bit more? Say, to six?

The precision-loss is really visible on the low end. Also, Passenger is still doing incredibly well, so much so that you can see it even at this precision.

Comments and Caveats

There are a lot of good reasons for asterisks here. First off, let’s talk about why Passenger benefits from concurrency so much: a combination of running multiprocess by default and built-in caching. That’s not cheating - you’ll get the same benefit if you just run it out of the box with no config like I did here. But it’s also not comparing apples to apples with other un-configured servers. If I built out a little NGINX config and did caching for the other app servers, or if I manually turned off caching for Passenger, you’d see more similar results. I’ll do that work eventually, after I switch away from ApacheBench.

Also, something has to be wrong in my Puma config here. While Puma and Thin get some advantage from higher concurrency, it’s not a large advantage. And I’ve measured a much bigger benefit from concurrency with Puma before, in my Rails Ruby Bench testing. I could speculate on why Puma didn’t do better, but instead I’m going to get a better load-tester and then debug properly. Expect more blog posts when it happens.

I hadn’t found Passenger’s guide to benchmarking before now - but kudos to them, they specifically try to shoo people away from ApacheBench for the same reasons I experienced. Well done, Phusion. I’ll check out their recommended load tester along with the other promising-looking ones (Ruby-JMeter, Locust, hand-rolled).

Conclusions

Here’s something I’ve seen before, but had trouble putting words to: if you’re going to barely configure something, set it up and hope it works, you should probably use Passenger. That used to mean a bit more setup because of the extra Passenger/Apache or Passenger/NGINX setup. But at this point, Passenger standalone is fairly painless (normal gem-based setup plus a few Linux packages). And as the benchmarks above show, a brainless “do almost nothing” setup favors Passenger very heavily, because the other app servers tend to need more configuration.

I’m surprised that Puma did so poorly, and I’ll look into why. I’ve always thought Passenger was a great recommendation for SREs that aren’t Ruby specialists, and this is one more piece of evidence in that direction. But Puma should still be showing up better than it did here, which suggests some kind of misconfiguration on my part - Puma uses multiple threads by default, and should scale decently.

That’s not saying that Passenger’s not a good production app server. It absolutely is. But I’ll be upgrading my load-tester and gathering more evidence before I put numbers to that assertion :-)

But the primary conclusion in all of this is simple: ApacheBench isn’t a great benchmarking program, and you should use something else instead. In two weeks, I’ll be back with a new benchmarking run using a better benchmarking tool.